As we hurtle towards an AI-driven future, a significant paradigm shift is on the horizon, one that could fundamentally alter how we access and use artificial intelligence across international borders. Drawing on my 30 years of experience building and evolving information systems for large-scale international efforts, I see striking parallels between our current AI trajectory and the restrictions placed on cryptography in the recent past.

The Crypto Precedent

Many may not remember that cryptography was once classified as a munition under US law. Throughout the 1990s and into the early 2000s, exporting strong encryption carried severe restrictions and penalties. This precedent offers a glimpse of how emerging technologies with significant security implications can be tightly controlled.

The AI Security Conundrum

With the myriad security concerns surrounding AI use, it seems inevitable that we'll soon see severe restrictions on access and use across borders. As AI models advance, we might even see safety pre-screening, or a security clearance process for civilian users, put in place to restrict access to the most powerful capabilities of these models.

While I've always been a staunch advocate for open information sharing and open data, even I must concede that such measures may be necessary. The dangers of misinformation alone are concerning, to say nothing of the more sinister potential applications in cybersecurity and biosecurity.

Beyond Security: The Liability Factor

Security isn't the only driver behind potential AI restrictions. Liability may prove an even more forceful motivator. An AI company's liability for enabling the creation of harmful artifacts remains untested in the US court system, let alone under international law.

We're already seeing the impact of existing liability issues related to privacy and personal data. Meta's announcement that it will limit access to its AI in the EU over GDPR concerns is just the tip of the iceberg, and several other companies are contemplating similar moves.

The China Model

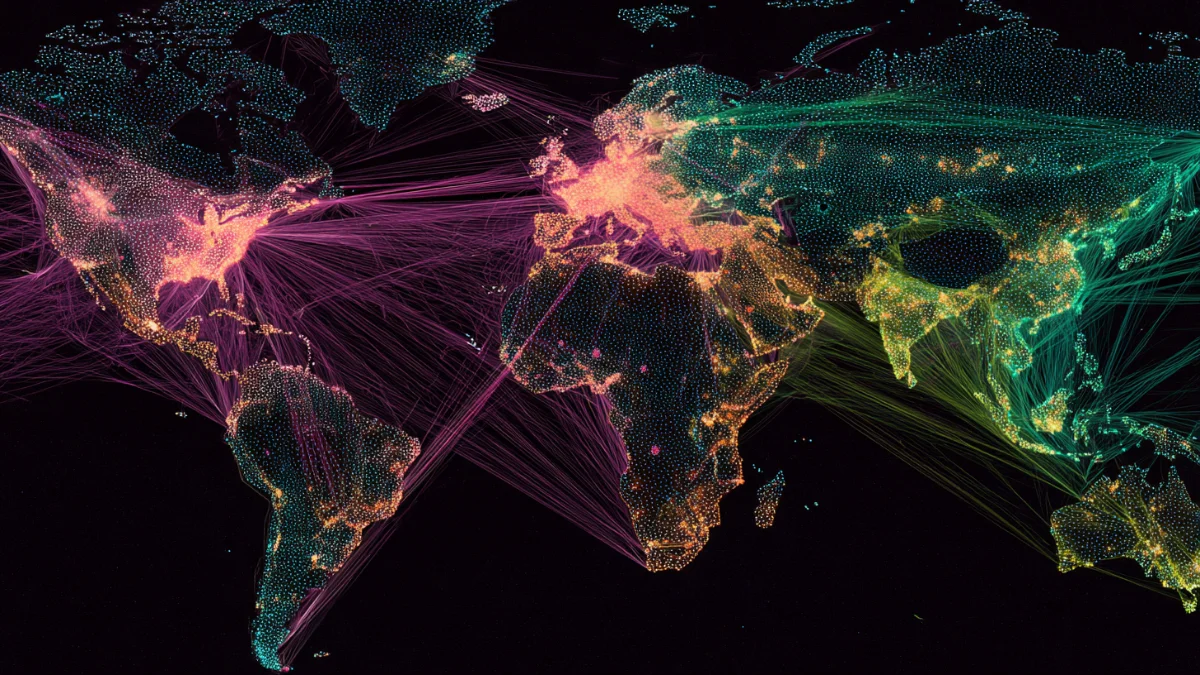

China has implemented severe restrictions on AI access within its borders and is likely to pursue a China-only AI ecosystem, mirroring its approach to social media and search engines. As comprehensive AI regulations emerge internationally, we can expect this trend toward national AI ecosystems to spread.

Humanitarian Implications

In the context of providing humanitarian aid with AI, these restrictions could prove severely limiting. I've seen firsthand the potential for AI to revolutionize humanitarian and climate action. Well-implemented AI could improve outcomes for people globally, delivering unprecedented gains in everything from planning efficiency and targeted response to individualized interventions at scale. GANNET, the Humanitarian AI Assistant I helped develop, is just one of many steps across the sector to leverage AI for global good. I hope to see these benefits realized at a more rapid pace.

However, the emerging landscape of AI restrictions presents a significant challenge. Crises can happen anywhere in the world, and uneven AI access from country to country could hamper the effectiveness of the tools brought to bear. For global NGOs, navigating these disparate regulations during a multi-country crisis could be treacherous.

As international NGOs begin to tap into emerging AI trends, the potential impact of international restrictions and regulations is top of mind for us. The promise of AI in humanitarian work is immense: it could enhance our ability to predict and respond to disasters, optimize resource allocation, and provide personalized assistance to those in need. Yet if access to these tools becomes fragmented along national lines, it could create a new digital divide in humanitarian response capabilities.

For instance, an NGO might develop an AI-powered system for early warning and rapid response in one country, only to find it cannot deploy the same system in another due to differing AI regulations. This could lead to inconsistent levels of aid and support across regions, potentially exacerbating existing inequalities in humanitarian assistance.

Moreover, the restrictions could hinder the collaborative nature of humanitarian work. Many crises require coordinated efforts across multiple countries and organizations. If each entity works with different AI capabilities because of varying national regulations, coordination becomes harder and overall effectiveness suffers. In real-world operations, unequal access to information could have dire consequences for the safety of humanitarian workers.

It's crucial for those of us in the humanitarian sector to engage in dialogue with policymakers. We need to advocate for regulatory frameworks that balance legitimate security concerns with the need for AI tools that can enhance our capacity to help those in need, regardless of where they are in the world.

Enforcement Models: A Paradigm Shift

The most likely models for enforcing these restrictions represent one of the biggest paradigm shifts in global regulation we'll see in our lifetimes. Two primary models are emerging, and we may well end up with some form of both:

Hardware-Level Restrictions: OpenAI's May 3rd post, "Reimagining secure infrastructure for advanced AI," recommended building strong encryption directly into GPUs and registering both the chips and their users with a governmental agency. That agency would have the power to ban users from using these chips for AI altogether.

Content Monitoring: OpenAI's appointment of retired General Paul Nakasone, former director of the National Security Agency, to its board of directors hints at a different approach. The NSA's expertise in cybersecurity and surveillance suggests we might see AI systems monitoring the prompts sent to LLMs and alerting officials to potentially dangerous or illegal chains of thought.

The Self-Enforcing Paradigm

This new landscape goes beyond liability and bureaucratic regulatory systems. We're moving towards a self-enforcing mechanism to uphold this paradigm. That is a massive departure from previous systems, where the slow pace of international law and the difficulty of regulatory enforcement left room to reconcile misaligned national policies.

We're rapidly approaching an environment where these policies will be self-regulating from day one, potentially becoming a fundamental part of the hardware architecture itself.

Conclusion

As we stand at this crossroads, it's crucial to engage in serious debate about the implications of these changes. If you don't see the need for a thorough discussion of both sides, you may not fully grasp the complexity of the problem.

The future of AI isn't just about technological advancement; it's about how we navigate the intricate interplay of innovation, security, and international cooperation. As the technology rapidly develops, we have to balance its potential for global good and economic development against the dangers of global disruption it presents. As professionals in the tech industry, it's our responsibility to stay informed and contribute to this vital dialogue.

What are your thoughts on this emerging landscape? How do you see it impacting your industry or area of expertise? Let's continue this crucial conversation in the comments below.